Archive: Fluent Newsflash [November 2024 #1]

If you've ever tried ChatGPT, Gemini or Copilot, you know the feeling of getting a wrong answer. It might be amusing, but it dents your trust in anything else the 'black box' spits out.

Fluent isn't built like a casual chatbot. It doesn't play a zero-sum game of 'answer at all costs'. This week, we wanted to share the three ways Fluent's AI approaches every query it receives, to protect you from wrong answers and hallucinations.

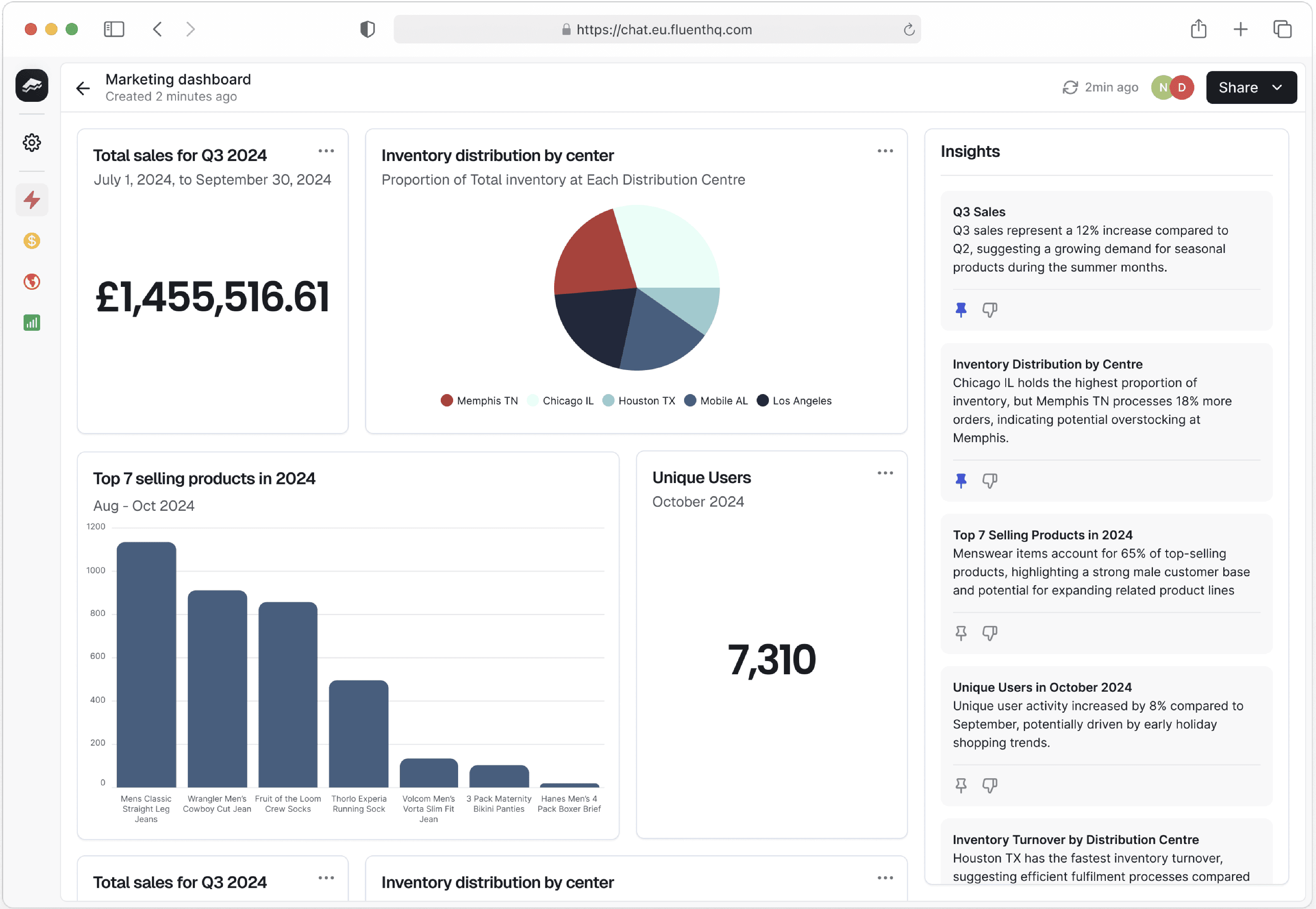

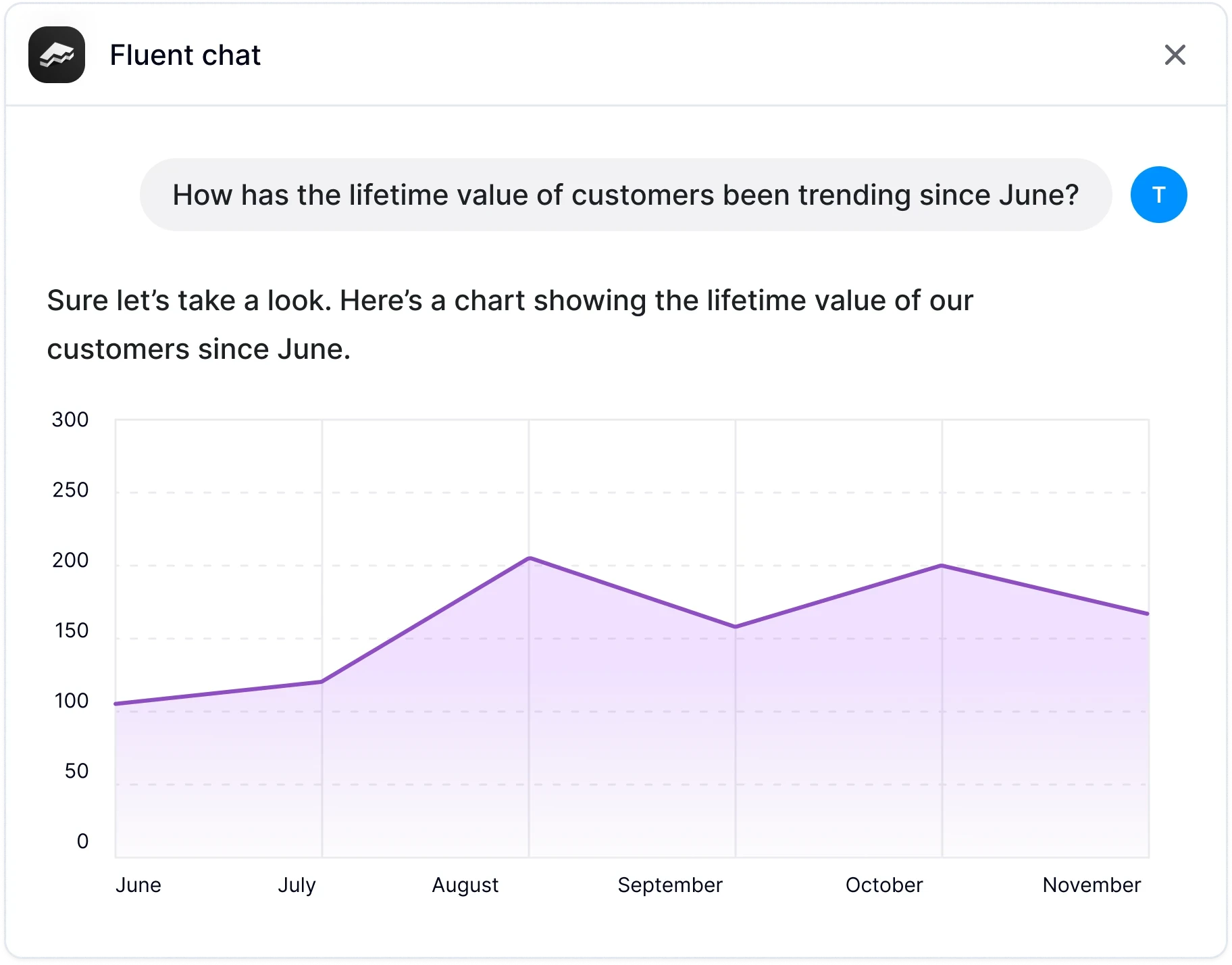

Path #1: Fluent Can Answer

Ask a question in plain English. Fluent understands the context, intent and ambiguity. Fluent generates an answer or visualisation.

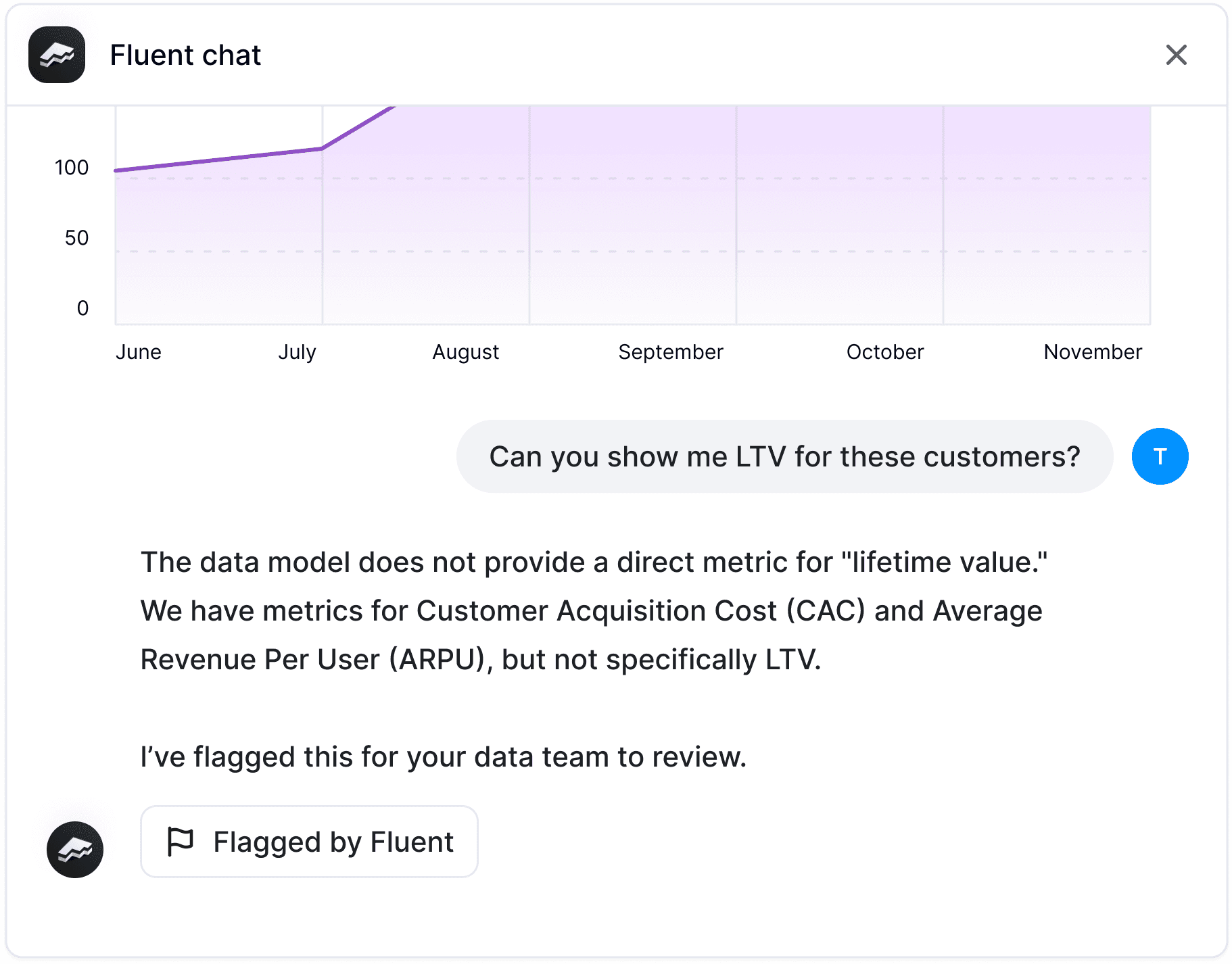

Path #2: Fluent Can't Answer

With some edge cases or net-new queries, the LLM will require additional metrics to answer.

You can simply flag the query, alerting the data team to hop in and add the required logic.

Path #3: Fluent Won't Answer

If data access is denied, users who believe they should have access are guided to request a permission change or data connection from the data team. And that's it. No guessing games, no hallucinations.
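For the technically curious, the three paths can be pictured as a simple routing decision. The sketch below is purely illustrative and is not Fluent's actual implementation: the names `Outcome`, `Query` and `route`, the lookup tables, and the choice to check permissions before metrics are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    ANSWERED = "answer"                 # Path #1: Fluent can answer
    CANNOT_ANSWER = "flag_query"        # Path #2: Fluent can't answer
    WILL_NOT_ANSWER = "request_access"  # Path #3: Fluent won't answer


@dataclass
class Query:
    # Hypothetical, simplified view of a parsed question.
    user: str
    dataset: str
    metric: str


def route(query, permissions, defined_metrics):
    """Pick exactly one of the three paths; there is no 'best guess' branch."""
    # Path #3: the user has no access to the data, so point them to the data team.
    if query.dataset not in permissions.get(query.user, set()):
        return Outcome.WILL_NOT_ANSWER
    # Path #2: access is fine, but the required metric logic is not defined yet.
    if query.metric not in defined_metrics.get(query.dataset, set()):
        return Outcome.CANNOT_ANSWER
    # Path #1: everything needed is in place, so an answer can be generated.
    return Outcome.ANSWERED


# Hypothetical example data.
permissions = {"ana": {"sales"}}
defined_metrics = {"sales": {"revenue"}}

print(route(Query("ana", "sales", "revenue"), permissions, defined_metrics))
print(route(Query("ana", "sales", "churn"), permissions, defined_metrics))
print(route(Query("ana", "hr", "headcount"), permissions, defined_metrics))
```

The point of the sketch is the shape of the decision: every query ends in an answer, a flag for the data team, or an access request, and never in a guess.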

Knowing when to say 'I don't know' is critical for LLMs within a business. It takes more work to train an AI this way, but the interactions we're seeing that end in a flaggable error message, rather than a best guess, are winning over a lot more users.

If you're keen to learn more about how our 'honest' AI works, visit our new product page or drop us a line for a 15-minute demo.

Hungry for more?

There are a ton of questions asked about BI and AI, and how they can work together. Explore some new content on the subject below.

